Price blindness costs retailers millions. Not in theory. In practice.

Every day, your competitors adjust thousands of prices while you’re flying blind. They optimize margins while you guess. They respond to market shifts while you analyze outdated spreadsheets. They win the algorithmic pricing game while you’re still learning the rules.

The hard truth: without real-time price intelligence, you’re making pricing decisions based on history, not reality.

But attempting large-scale price monitoring brings its own challenges. Your scrapers get blocked. Your IPs get banned. Your data collection stops entirely. Your competitive intelligence becomes Swiss cheese – full of holes.

This is where residential proxies change everything. Not by bending the rules, but by working within them to deliver the continuous market visibility that modern retail demands.

The competitive price monitoring landscape

In today’s retail environment, price isn’t just a number. It’s a strategic lever that impacts every business metric that matters.

Modern retailers deploy price intelligence across multiple use cases:

Dynamic pricing optimization requires continuous competitor monitoring to maintain the perfect balance between market competitiveness and margin protection. Without reliable data streams, algorithms make dangerously uninformed decisions.

MAP compliance monitoring helps brands protect their value proposition by identifying unauthorized discounting. When monitoring fails, brand equity erodes through unchecked price violations.

Inventory and availability tracking provides early warning of competitor stock positions. This intelligence feeds both purchasing and pricing decisions, preventing inventory misalignment with market conditions.

Promotional analysis reveals competitor discount strategies and timing patterns. Without this intelligence, your promotion schedule operates in a strategic vacuum.

Private label comparison tracks how your products stack up against competing house brands. This intelligence drives both pricing and product development decisions.
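Of the use cases above, MAP compliance monitoring is the easiest to make concrete: it comes down to comparing each advertised price against the brand's minimum for that SKU. The following is a minimal sketch under stated assumptions. The `Observation` record, the `find_map_violations` helper, and the sample data are all hypothetical.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Observation:
    """One advertised price seen for a SKU at a seller (hypothetical shape)."""
    sku: str
    seller: str
    advertised_price: Decimal


def find_map_violations(observations, map_prices):
    """Return observations advertised below the MAP for their SKU.

    SKUs without a MAP entry are skipped rather than guessed at.
    """
    violations = []
    for obs in observations:
        floor = map_prices.get(obs.sku)
        if floor is not None and obs.advertised_price < floor:
            violations.append(obs)
    return violations


# Illustrative data only.
map_prices = {"TENT-2P": Decimal("199.00")}
observations = [
    Observation("TENT-2P", "dealer-a", Decimal("199.00")),
    Observation("TENT-2P", "dealer-b", Decimal("179.99")),
    Observation("STOVE-1", "dealer-b", Decimal("49.00")),
]
violations = find_map_violations(observations, map_prices)
print([v.seller for v in violations])  # ['dealer-b']
```

`Decimal` is used instead of floats so that a price exactly at the MAP is never flagged because of rounding.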

The scale requirements are substantial. Enterprise retailers typically need to monitor:

- Thousands of SKUs

- Dozens of competitors

- Multiple geographic regions

- Daily (sometimes hourly) price changes

When price monitoring fails, the business consequences cascade throughout the organization. Pricing algorithms make wrong decisions. Marketing promotions launch at suboptimal times. Inventory positions become misaligned with market conditions.

The result? Profit margin erosion that no retail operation can afford.

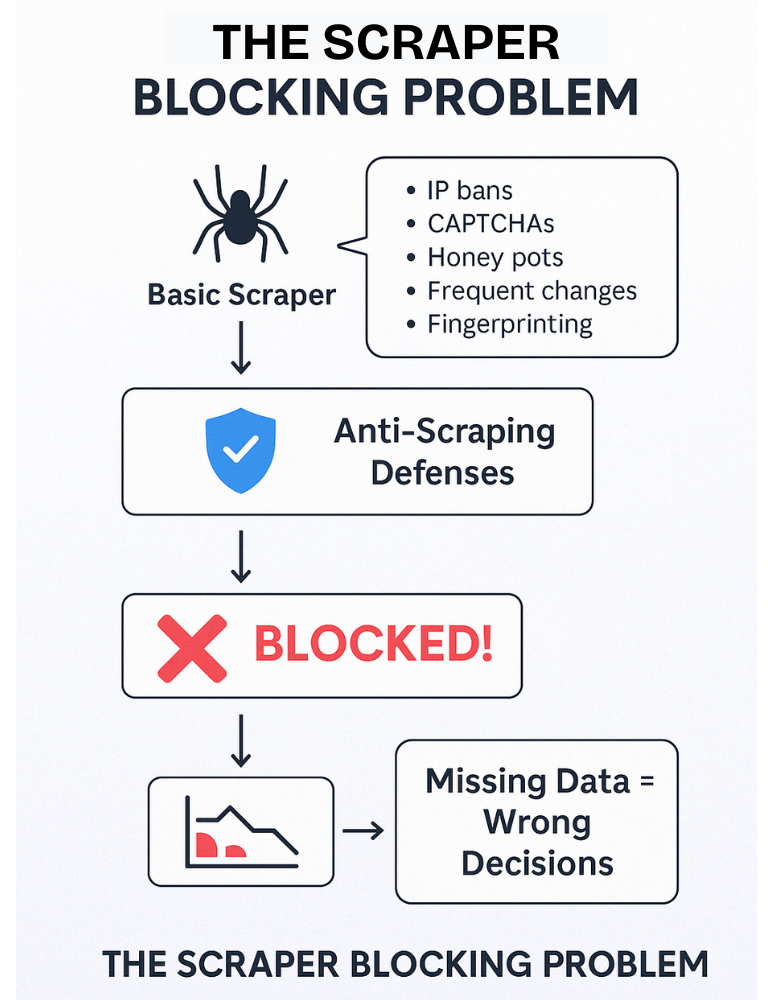

Why basic scraping fails

The days of simple HTML scraping are over. E-commerce platforms have evolved sophisticated defense systems specifically designed to thwart competitive monitoring.

Modern anti-scraping technologies employ multiple detection layers:

IP-based rate limiting identifies and restricts requests coming from the same origin. Basic scrapers hit these limits within minutes, not hours.

Browser fingerprinting goes beyond IP detection to examine device characteristics, creating a digital “fingerprint” that persists even when IPs change. This technology identifies scraping tools through their non-human configurations.

Behavioral analysis examines how users navigate sites. Scrapers typically follow patterns no human would – accessing pages too quickly, in unnatural sequences, or with machine-like precision.

CAPTCHA challenges target suspected automated tools, grinding data collection to a halt when human intervention isn’t available.

JavaScript challenges require browsers to execute complex code before displaying content. Most basic scrapers can’t render these challenges, resulting in incomplete or missing data.

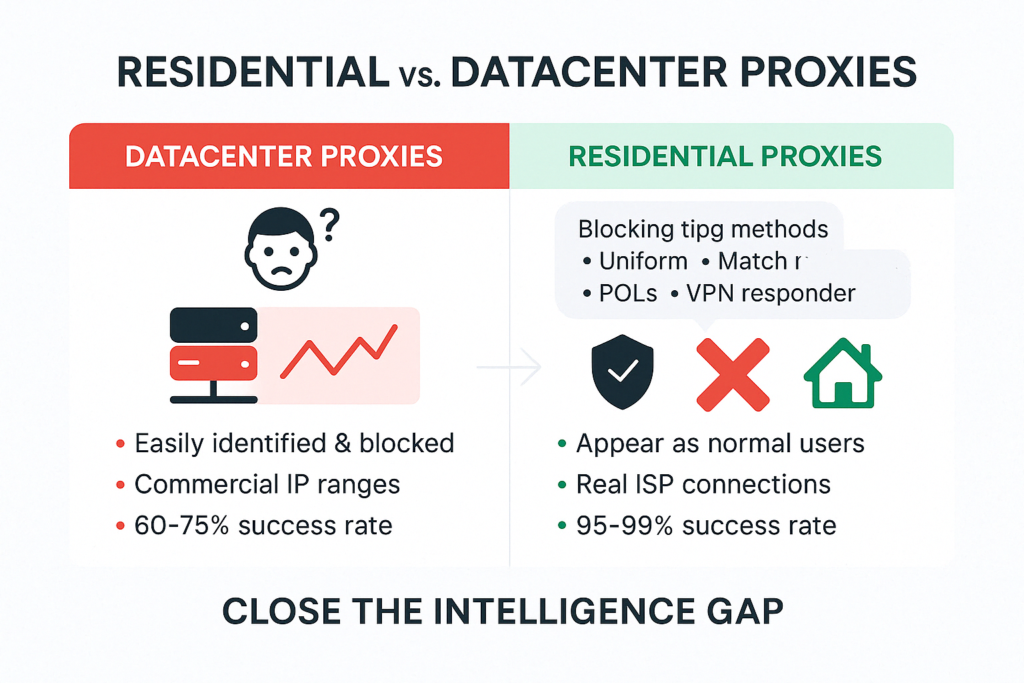

Standard datacenter proxies offer minimal protection against these measures. Their IP ranges are well-known and easily identified as non-residential traffic. Major e-commerce platforms maintain blocklists of datacenter IP ranges explicitly to prevent scraping.

A major sporting goods retailer learned this lesson the expensive way. They implemented price monitoring using basic datacenter proxies, achieving initial success until their entire IP pool was blacklisted simultaneously. Their competitive monitoring went dark for weeks while they scrambled for alternatives.

When monitoring fails, data gaps create dangerous blind spots. These aren’t just inconvenient – they’re operationally devastating. Imagine adjusting thousands of prices based on competitor data that’s 30% incomplete. The business risk is unacceptable.
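One way to contain that risk is to measure completeness before any repricing run and hold back when coverage is too low. The sketch below assumes a simple mapping of SKU to collected price. The function names and the 95% default threshold are illustrative assumptions, not an industry rule.

```python
def completeness(expected_skus, collected_prices):
    """Fraction of expected SKUs that have a collected price."""
    expected = set(expected_skus)
    if not expected:
        return 1.0
    found = sum(1 for sku in expected if collected_prices.get(sku) is not None)
    return found / len(expected)


def safe_to_reprice(expected_skus, collected_prices, min_completeness=0.95):
    """Gate automated repricing on data coverage (threshold is an assumption)."""
    return completeness(expected_skus, collected_prices) >= min_completeness


# Illustrative run: 10 SKUs expected, only 7 collected (30% incomplete).
expected = [f"sku-{i}" for i in range(10)]
collected = {f"sku-{i}": 9.99 for i in range(7)}
coverage = completeness(expected, collected)
print(coverage, safe_to_reprice(expected, collected))  # 0.7 False
```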

Residential proxies are your essential infrastructure

Residential proxies provide the foundation for reliable price intelligence by routing requests through real household connections assigned by legitimate Internet Service Providers.

Unlike datacenter proxies (which originate from obvious commercial hosting facilities), residential IPs appear identical to regular consumer traffic. This fundamental difference transforms scraping success rates from intermittent to consistent.

For price monitoring specifically, rotating residential proxies deliver four critical advantages:

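Natural network profiles make requests look like ordinary household traffic at the IP level, which removes the signal that immediately flags datacenter traffic. The proxy changes only where a request appears to come from; the browser fingerprint is still generated by the scraping client and has to look realistic on its own.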

Legitimate ISP relationships ensure the IP addresses aren’t on existing blocklists. When traffic comes from major consumer ISPs rather than hosting companies, it starts with a clean reputation.

Geographic distribution enables monitoring from the specific regions where you and your competitors sell. This matters because many retailers show different prices based on visitor location.

Reduced blocking probability translates directly to higher data completeness rates. Enterprise implementations typically see success rates jump from 60-70% with datacenter proxies to 95-99% with properly implemented residential proxy networks.

For enterprise implementation, ethical sourcing isn’t optional. Legitimate residential proxy providers obtain proper consent from device owners, implement clear terms of service, and provide fair compensation models. This eliminates the legal and compliance risks associated with questionable proxy sources.

Integration approaches vary based on existing infrastructure, but most enterprises implement proxy management layers that handle authentication, rotation, and session management independently from the scraping logic.

Rotating vs. static proxies

The price monitoring proxy decision isn’t just residential versus datacenter. The rotation strategy fundamentally impacts monitoring capabilities.

Rotating residential proxies excel in specific scenarios:

Large-scale data collection operations monitoring thousands of products across dozens of sites demand distribution across many IPs to prevent pattern detection. Rotation creates the request diversity essential for avoiding rate limits.

Websites with aggressive scraper detection typically block IPs after relatively few requests. Rotating residential proxies ensure no single IP hits detection thresholds.

Geographic distribution requirements often necessitate proxies from multiple regions simultaneously. Rotating pools provide the necessary coverage without managing individual proxies for each location.

Static residential proxies prove optimal in different contexts:

Login-protected resources requiring consistent sessions benefit from stable IPs that maintain authentication across requests. This applies to vendor portals, distributor pricing systems, and membership-based e-commerce.

Trust-based access patterns emerge on some platforms that actually restrict access to new or unknown IP addresses. Static IPs build trust profiles over time, increasing access reliability.

Account-based monitoring requires consistent identity to prevent security flags that might lock accounts. Static IPs provide this consistency.

Most sophisticated retail intelligence operations leverage a hybrid approach:

- Static residential IPs for high-value, login-required resources

- Rotating residential proxies for broad market monitoring across public e-commerce sites

The optimal rotation strategy depends on target site sensitivity. Some operations rotate IPs with every request, while others maintain the same IP for specific session durations to appear more natural. The most advanced implementations use machine learning to determine optimal rotation timing based on target site behavior.
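The two simpler strategies, rotating on every request and holding an IP for a session window, can be sketched in a few lines. This is an illustration under stated assumptions, not any provider's API: `ProxySelector`, its parameters, and the proxy URLs are hypothetical, and round-robin stands in for whatever selection a real pool would use.

```python
import itertools
import time


class ProxySelector:
    """Pick a proxy per request, either rotating or sticky per session."""

    def __init__(self, proxies, sticky_seconds=0, clock=time.monotonic):
        self._cycle = itertools.cycle(proxies)
        self._sticky_seconds = sticky_seconds
        self._clock = clock
        self._sessions = {}  # session_id -> (proxy, expiry time)

    def pick(self, session_id=None):
        # No session or no stickiness: rotate on every request.
        if session_id is None or self._sticky_seconds <= 0:
            return next(self._cycle)
        now = self._clock()
        entry = self._sessions.get(session_id)
        if entry is not None and entry[1] > now:
            return entry[0]  # session window still open, keep the same IP
        proxy = next(self._cycle)
        self._sessions[session_id] = (proxy, now + self._sticky_seconds)
        return proxy


# Placeholder endpoints; a real pool would come from the proxy provider.
pool = [f"http://proxy-{i}.example:8000" for i in range(1, 4)]
rotating = ProxySelector(pool)
sticky = ProxySelector(pool, sticky_seconds=300)
```

With `rotating`, consecutive calls to `pick()` walk through the pool. With `sticky`, every `pick("cart-session")` inside the five-minute window returns the same proxy, which suits the login-protected resources described earlier.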

Building effective price monitoring

Successful price monitoring requires thoughtful system architecture extending beyond proxy implementation.

The core components include:

Proxy management infrastructure handles authentication, rotation rules, and health monitoring. This layer ensures optimal proxy utilization and provides fallback mechanisms when blocks occur.

Request distribution logic controls how scraping tasks get allocated across the proxy pool. Advanced implementations use adaptive distribution that responds to success rates across different targets.

Content parsing systems extract structured data from diverse page formats. These systems require ongoing maintenance as target sites change layouts.

Data normalization standardizes product information across competitors to enable accurate comparison. This layer matches products despite different naming conventions and specifications.

Exception handling protocols define responses to blocking events, CAPTCHA challenges, and site structure changes. Robust error handling makes the difference between partial and complete data.
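The health monitoring and adaptive distribution described above can be combined in one small component: record the outcome of every request per proxy and per target, then weight future selection by observed success. The sketch below is one possible design, not a standard one. `ProxyHealth`, the smoothing scheme, and the minimum weight are assumptions chosen for illustration.

```python
import random
from collections import defaultdict


class ProxyHealth:
    """Track per-proxy, per-target outcomes and prefer what works."""

    def __init__(self, proxies, min_weight=0.05, rng=None):
        self._proxies = list(proxies)
        self._min_weight = min_weight
        self._rng = rng or random.Random()
        # (proxy, target) -> [successes, attempts]
        self._stats = defaultdict(lambda: [0, 0])

    def record(self, proxy, target, ok):
        stats = self._stats[(proxy, target)]
        stats[0] += 1 if ok else 0
        stats[1] += 1

    def success_rate(self, proxy, target):
        successes, attempts = self._stats[(proxy, target)]
        # Smoothed estimate: an untried proxy starts at 0.5, not 0 or 1.
        return (successes + 1) / (attempts + 2)

    def choose(self, target):
        # Weighted random choice; the floor keeps blocked proxies in
        # occasional rotation so a recovery can be noticed.
        weights = [max(self.success_rate(p, target), self._min_weight)
                   for p in self._proxies]
        return self._rng.choices(self._proxies, weights=weights, k=1)[0]
```

Keeping statistics per target rather than per proxy reflects the fact that an IP blocked on one site may work perfectly well on another.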

Best practices for proxy implementation include:

Session management techniques that maintain cookies and state appropriately. Some systems require full session persistence, while others perform better with clean sessions for each request.

Request timing strategies that mimic human browsing patterns. The most effective approach randomizes intervals between requests to avoid obvious automation signatures.

Header configuration supports a consistent browser profile. This includes setting appropriate User-Agent strings, Accept-Language headers, and Referer values that match legitimate browsing patterns. Headers are only one input to fingerprinting, so they need to agree with how the rest of the client behaves.

JavaScript handling requires full browser rendering capabilities. Modern sites often employ JavaScript challenges specifically to block basic scrapers that don’t execute page code.
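The timing and header practices above can be sketched as follows. Pacing requests this way also keeps the load on the target site modest. `jittered_delay`, `build_headers`, and every header value here are illustrative assumptions, not settings recommended by any site or provider.

```python
import random

# Placeholder values; a real client should send headers that match
# the browser it actually drives.
BASE_HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
    ),
    "Accept": "text/html,application/xhtml+xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en-US,en;q=0.9",
}


def jittered_delay(base_seconds, jitter=0.5, rng=random):
    """Return a delay drawn uniformly from base * (1 - jitter) to base * (1 + jitter)."""
    if not 0 <= jitter < 1:
        raise ValueError("jitter must be in [0, 1)")
    low = base_seconds * (1 - jitter)
    high = base_seconds * (1 + jitter)
    return rng.uniform(low, high)


def build_headers(referer=None):
    """Copy the base headers and add a Referer when one makes sense."""
    headers = dict(BASE_HEADERS)
    if referer:
        headers["Referer"] = referer
    return headers
```

A collection loop would call `time.sleep(jittered_delay(4.0))` between requests, giving pauses between 2 and 6 seconds instead of a fixed, machine-like interval.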

Scaling considerations depend directly on catalog size and competitor count. Most enterprise implementations start with a proxy-to-target ratio of approximately 10:1 – ten proxies for each target site being monitored. This ratio ensures sufficient distribution while maintaining cost efficiency.
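As a worked example of the 10:1 starting ratio, the arithmetic looks like this. The `sensitivity` multiplier is an assumption added for illustration, to show how the baseline might be adjusted for harder targets.

```python
import math


def starting_pool_size(target_sites, proxies_per_target=10, sensitivity=1.0):
    """Estimate a starting proxy pool from the 10:1 rule of thumb.

    sensitivity is a hypothetical multiplier (above 1.0 for sites that
    block aggressively); it is not part of the rule itself.
    """
    return math.ceil(target_sites * proxies_per_target * sensitivity)


print(starting_pool_size(20))                   # 200
print(starting_pool_size(30))                   # 300
print(starting_pool_size(25, sensitivity=1.5))  # 375
```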

The business case for proxy-powered monitoring

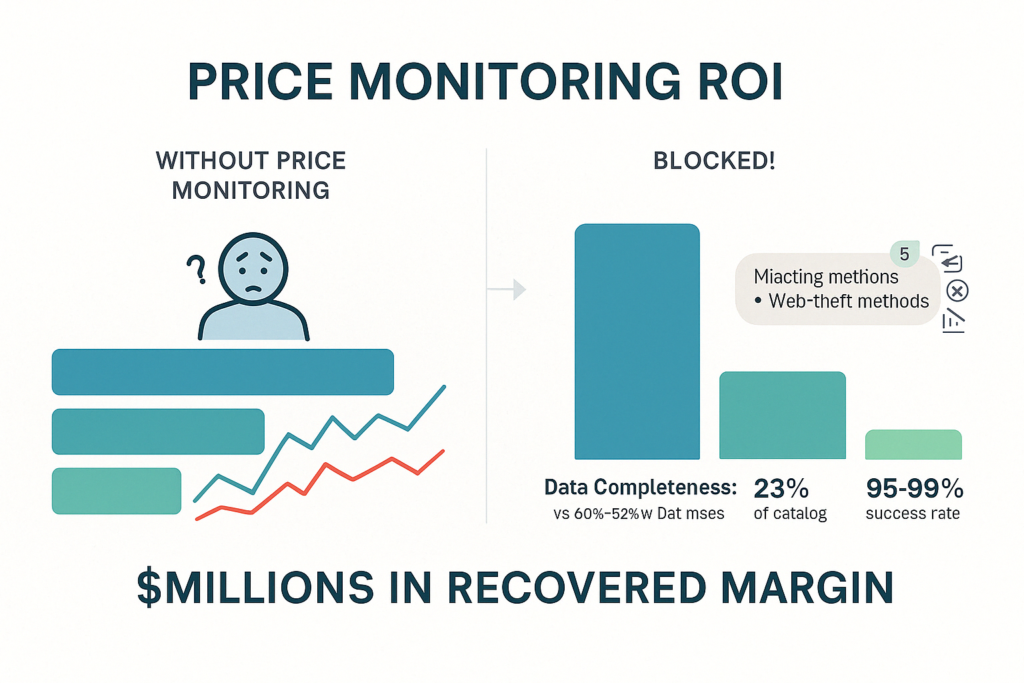

The investment in residential proxy infrastructure delivers quantifiable returns across multiple metrics.

Data completeness typically improves from 60-75% with basic approaches to 95-99% with proper residential proxy implementation. This completeness directly impacts pricing decision quality.

Monitoring reliability transforms from intermittent to consistent. This reliability enables true automation of pricing responses rather than manual interventions when monitoring fails.

Time-to-insight accelerates dramatically when data collection operates continuously rather than requiring restarts after blocking events. In competitive retail categories, hours matter.

The financial impact manifests across business functions:

A specialty electronics retailer implemented residential proxy-based monitoring and discovered 23% of their catalog was priced at least 12% above competitor prices without any offsetting advantage. Correcting just these pricing outliers increased conversion rates by 3.1% and revenue by 4.5% – delivering seven-figure annual impact.

A sporting goods manufacturer identified MAP violations across 18% of their distribution network using comprehensive monitoring. Enforcement actions recovered an estimated $3.2M in brand value erosion and protected margin integrity across their authorized dealer network.

An office supply retailer’s inventory strategy benefited from early competitor stock position intelligence, allowing them to secure high-demand product allocations before stockouts appeared publicly. This intelligence directly increased capture of substitute product revenue during a competitor’s inventory shortage.
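The kind of check behind the electronics retailer example, flagging items priced at least 12% above competitors, can be sketched as below. The example does not say which competitor price served as the benchmark, so this sketch assumes the lowest observed price. The function name and sample figures are hypothetical.

```python
def overpriced_skus(own_prices, competitor_prices, threshold=0.12):
    """Map SKU to relative gap for items at least `threshold` above the
    lowest competitor price. SKUs with no competitor data are skipped."""
    flagged = {}
    for sku, own in own_prices.items():
        rivals = competitor_prices.get(sku)
        if not rivals:
            continue
        lowest = min(rivals)
        gap = (own - lowest) / lowest
        if gap >= threshold:
            flagged[sku] = gap
    return flagged


# Illustrative data only.
own = {"A": 115.0, "B": 105.0, "C": 50.0}
rivals = {"A": [100.0, 104.0], "B": [100.0]}
flagged = overpriced_skus(own, rivals)
print(sorted(flagged))  # ['A']
```

Skipping SKUs without competitor data, rather than treating them as correctly priced, is what ties this check back to data completeness.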

The typical enterprise implementation costs include:

- Residential proxy access: $3,000-8,000 monthly depending on scale

- Monitoring platform: $5,000-10,000 monthly for enterprise solutions

- Integration engineering: $15,000-30,000 initial implementation

- Ongoing maintenance: 1-2 dedicated technical resources

Against a mid-sized retailer’s revenue, these investments typically show ROI timeframes of 3-6 months through direct margin improvements and competitive advantage.
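To check whether a given deployment fits that window, the payback period can be estimated from the cost ranges listed above. In this sketch the monthly margin gain is a purely hypothetical input, since it depends on the retailer, and staffing cost is left out because no dollar figure is given for it.

```python
def payback_months(initial_cost, monthly_cost, monthly_margin_gain):
    """Months needed for net monthly gains to cover the initial cost.

    Returns None when the gain does not exceed the running cost.
    """
    net = monthly_margin_gain - monthly_cost
    if net <= 0:
        return None
    return initial_cost / net


# Upper end of the listed ranges: $30,000 integration, $8,000 proxies
# plus $10,000 platform per month. The $25,000 gain is an assumption.
months = payback_months(30_000, 8_000 + 10_000, 25_000)
print(round(months, 1))  # 4.3
```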

Future price monitoring innovations

The price intelligence landscape continues advancing beyond basic monitoring.

Machine learning integration now enables predictive pricing models that anticipate competitor changes before they occur. These systems analyze historical patterns to forecast pricing strategies.

Natural language processing addresses the product matching challenge by understanding context rather than relying on exact specification matching. This capability dramatically improves monitoring accuracy for complex products.

Visual recognition extracts pricing from images rather than just text elements. This capability overcomes attempts to obscure pricing in non-textual formats.

Integrated competitive response systems automatically adjust prices based on monitoring insights without human intervention. These closed-loop systems operate on a timescale of seconds or minutes rather than the traditional daily or weekly adjustments.

Organizations implementing price monitoring today are laying the foundation for these advanced capabilities, which all depend on reliable, continuous data collection.

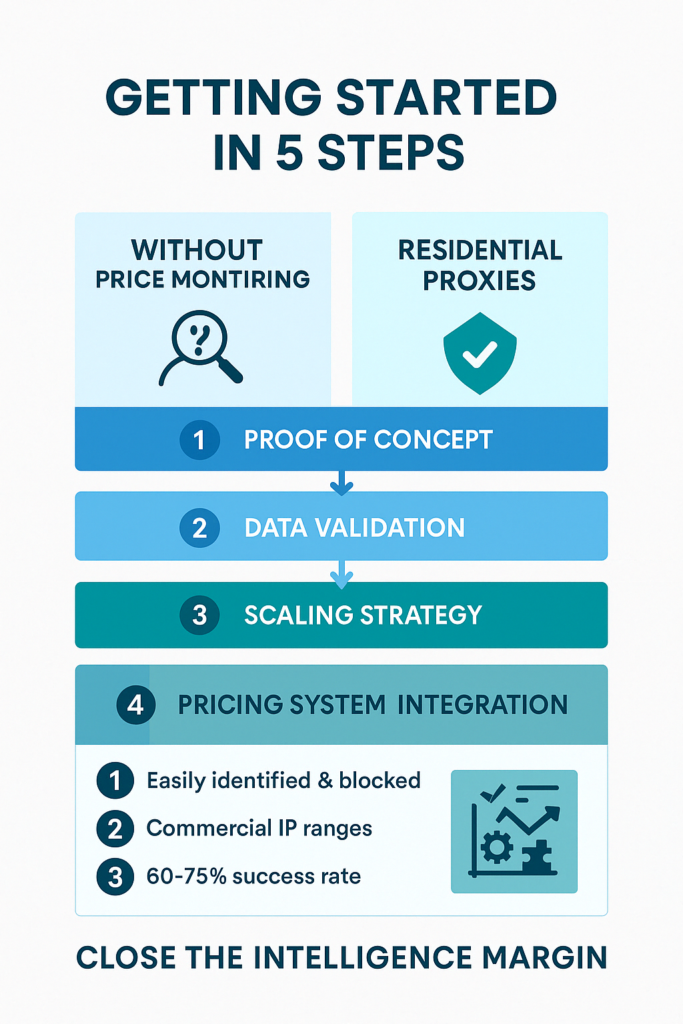

Getting started

Organizations new to proxy-based price monitoring should follow a structured implementation approach:

- Provider selection focusing on geographic coverage, ethical sourcing, and technical support capabilities. IPBurger offers industry-leading residential proxy coverage across 195+ countries with the technical infrastructure necessary for reliable retail monitoring.

- Proof of concept development targeting a limited product set across 3-5 key competitors. This focused implementation validates both technical approach and business value.

- Data validation comparing proxy-collected data against manual verification. This step ensures collection accuracy before making business decisions based on the intelligence.

- Scaling strategy that expands coverage methodically across the catalog. Most organizations prioritize high-margin, highly competitive categories first.

- Integration with pricing systems to transform monitoring from interesting intelligence to actionable input for pricing decisions.
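The data validation step in the list above can be sketched as a comparison between scraped prices and a manually checked sample. The report shape, the function name, and the one-cent tolerance are assumptions made for illustration.

```python
def validation_report(scraped, manual, tolerance=0.01):
    """Compare scraped prices with a manually verified sample.

    Both arguments map SKU to price. Accuracy is the share of manually
    checked SKUs whose scraped price is present and within tolerance.
    """
    missing = []
    mismatches = {}
    for sku, true_price in manual.items():
        got = scraped.get(sku)
        if got is None:
            missing.append(sku)
        elif abs(got - true_price) > tolerance:
            mismatches[sku] = (got, true_price)
    checked = len(manual)
    matched = checked - len(missing) - len(mismatches)
    accuracy = matched / checked if checked else 0.0
    return {"accuracy": accuracy, "missing": missing, "mismatches": mismatches}


# Illustrative sample of four manually checked SKUs.
manual = {"A": 19.99, "B": 5.00, "C": 42.50, "D": 10.00}
scraped = {"A": 19.99, "B": 5.50, "C": 42.50}
report = validation_report(scraped, manual)
print(report["accuracy"], report["missing"])  # 0.5 ['D']
```

Reporting missing and mismatched SKUs separately helps with diagnosis: missing prices usually point to blocking, while mismatches point to parsing or product-matching errors.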

The implementation team should include technical resources who understand web scraping challenges, business analysts who can validate data quality, and pricing specialists who will ultimately use the intelligence.

The price intelligence imperative

In today’s retail environment, price intelligence isn’t optional. It’s essential.

The question isn’t whether you need competitive price monitoring. It’s whether your monitoring will work when it matters most.

Residential proxies – properly implemented, ethically sourced, and strategically deployed – transform price intelligence from aspiration to operational reality. They bridge the gap between what retailers want to know and what they can reliably collect.

In a market where prices change by the hour and competitors constantly test boundaries, complete visibility isn’t just an advantage. It’s survival.

FAQs

How many proxies do I need for effective price monitoring?

For enterprise retail operations monitoring 20–30 competitors, a starting pool of 200–300 residential proxies provides sufficient distribution. Scaling follows a general rule of 10 proxies per target site, adjusted based on site sensitivity and request volume.

Can’t I just use free or cheap proxies for price monitoring?

Free and ultra-cheap residential proxies create three critical problems: unreliability (frequent downtime), poor performance (high latency), and serious security risks (many free proxies intercept and analyze traffic). Enterprise price intelligence requires properly sourced, reliable proxy infrastructure.

How do I prevent getting blocked when monitoring competitor prices?

Prevention requires four key elements: residential proxies that appear as legitimate users, proper rotation strategies that prevent pattern detection, request timing that mimics human browsing behavior, and full browser rendering with a realistic fingerprint so that JavaScript challenges are executed rather than skipped.

Is using proxies for price monitoring legal?

Yes, when implemented properly. Collecting publicly available pricing information is legal in most jurisdictions. However, implementation must respect terms of service regarding access methods, avoid excessive server load, and never attempt to access protected information requiring authentication (unless explicitly authorized).

How fresh is proxy-collected pricing data?

With proper implementation, data freshness is limited only by collection frequency. Enterprise systems typically refresh competitive pricing every 4-24 hours depending on category volatility, with critical products sometimes monitored hourly during promotional periods.

How does IPBurger ensure residential proxy quality for price monitoring?

IPBurger Proxy maintains a pool of 100M+ residential IPs across 195+ countries with stringent quality controls. Its proxy infrastructure includes automatic health monitoring, rotation management, and session control capabilities specifically designed for retail intelligence applications.

Can my competitors tell that I’m monitoring their prices?

When properly implemented with private residential proxies, monitoring activities appear identical to normal customer traffic. Without inside access to their security systems, competitors cannot distinguish well-executed monitoring from regular site visitors.